Autonomous Pick and Place Robot Arm

As this is a project from work, I will not go in depth into the technical details, and will only showcase the end results.

Project Description

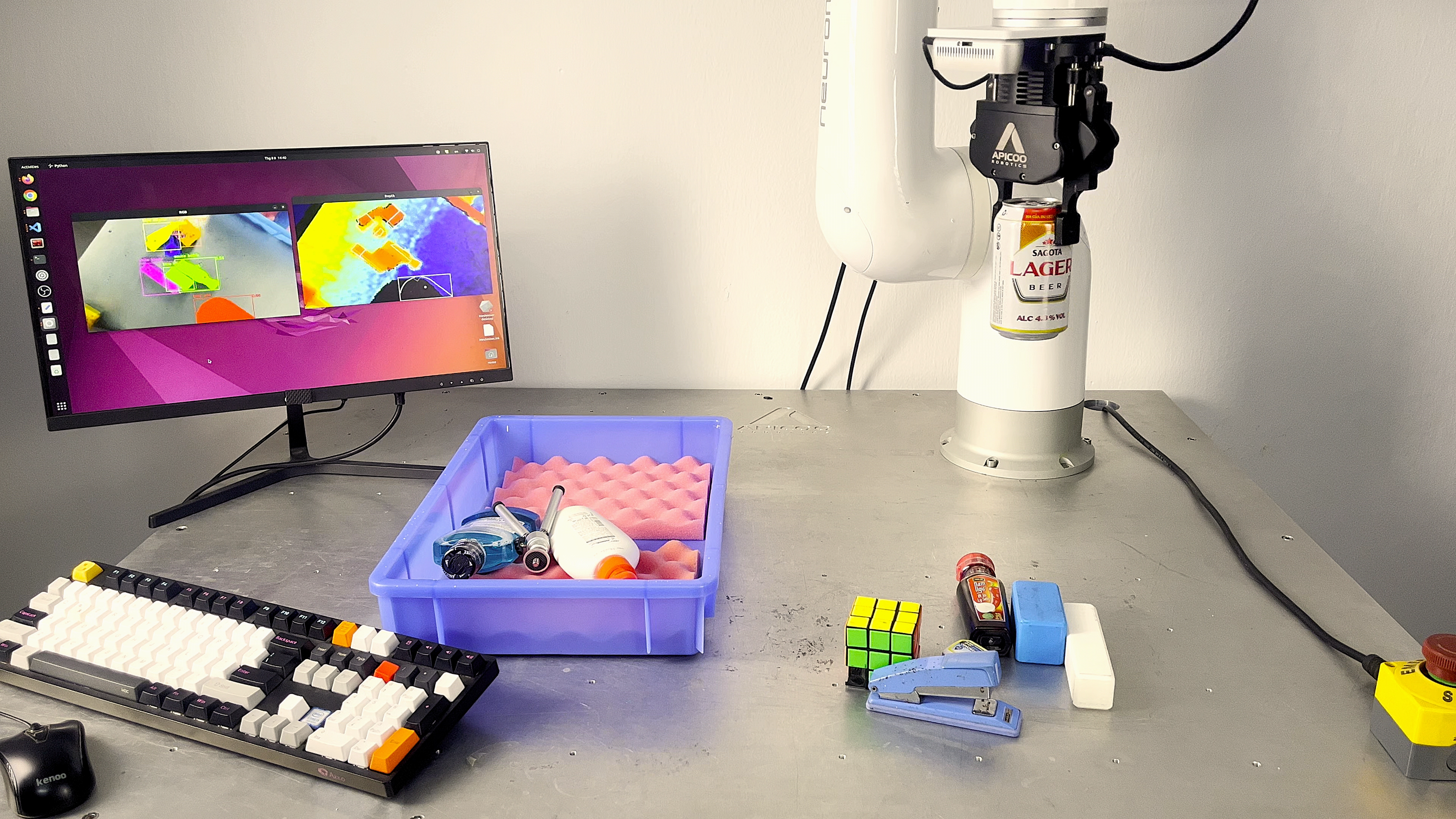

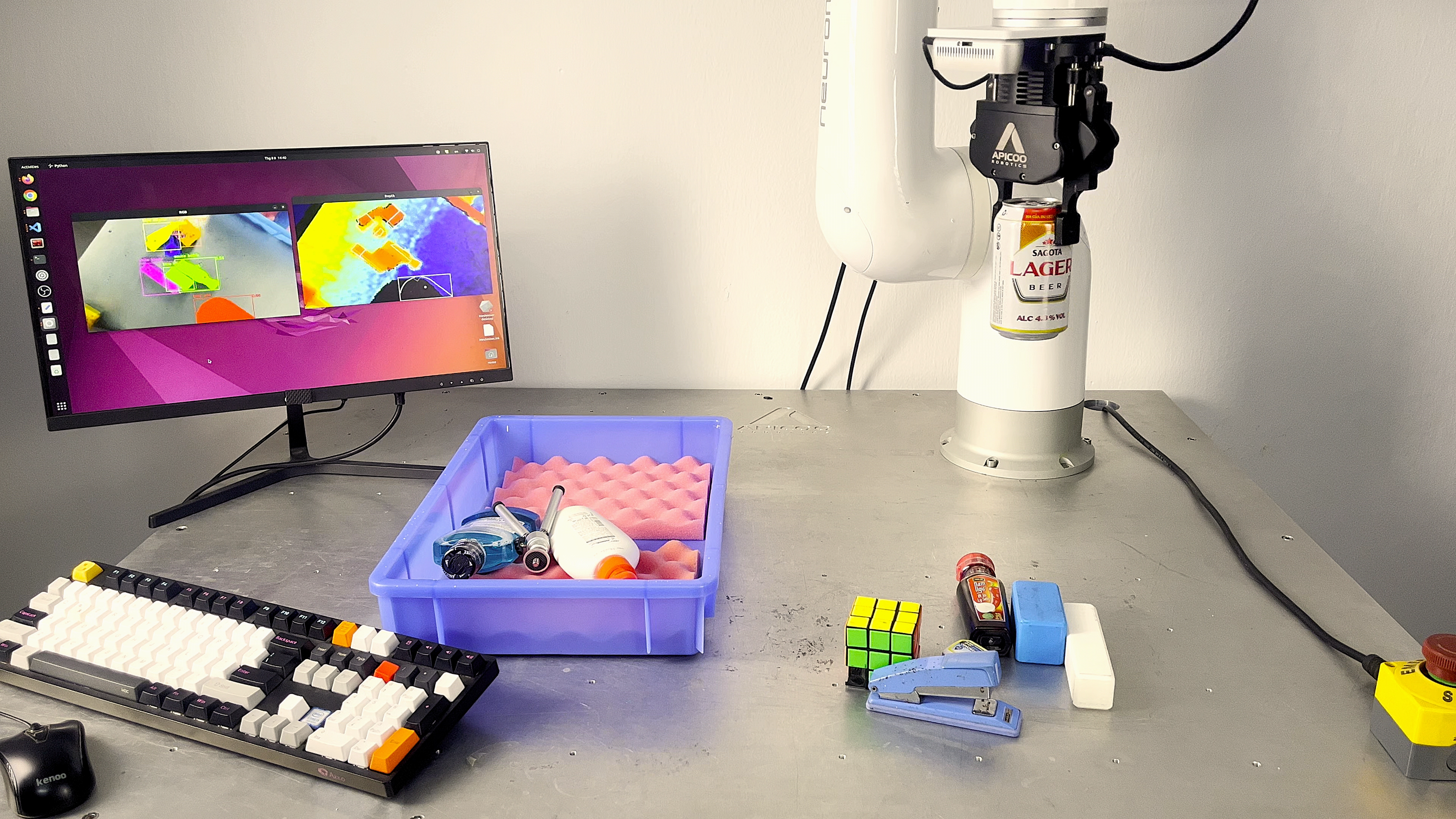

This was a project I worked on for about 6 weeks during my 3-month internship in Apicoo Robotics over the summer of 2025.

Apicoo Robotics produces the SusGrip line of smart grippers, offering plug-and-play grasping/manipulation solutions for users of collaborative robots.

The company has a long-term goal of providing a turnkey solution for autonomous pick-and-place tasks using a single gripper with integrated camera and compute hardware. In other words, the ideal product is to allow the user to simply install the gripper onto a robot arm, connect it to some controller, and with a few clicks/text input, allow it to perform any custom pick-and-place task.

Of course, it would not be possible to achieve this entire vision during my internship. Thus, my goal was to simply create an initial prototype showcasing this process without the hardware and computing power restrictions. This could then serve as a baseline for future improvements.

Problem Breakdown

This was a very broad problem, with a wide variety of approaches that I could try, from using traditional computer vision and control, to using end-to-end Vision-Language-Action models.

However, to keep thing simple, I broke it down into 4 smaller sub-problems:

- Object Detection: Classify (and locate) objects that the camera sees to allow for sorting of objects into their respective classes.

- Instance Segmentation: Get pixel accurate masks of the objects to determine the object’s actual shape.

- Scene Reconstruction: Convert objects’ positions and shapes into real-world coordinates/point clouds.

- Trajectory Planning: Deriving the actual motion of the robot arm, e.g. grasping position, orientation, picking order, etc.

In reality, each of these problems have their own complex considerations as well but I stuck to my pre-defined plan of creating the simplest solutions to get a working prototype first before trying to improve it.

Video Demo

The completed demonstration shows the user sending a text string of the objects in the scene to a Vision-Language model, which identifies and segment the objects in the scene using an RGB-D camera. With the depth data, an algorithm determines the next object to pick up by weighing various metrics such as the objects’ heights, sizes, closeness to other objects, etc.

For each object, a grasping point is also calculated based on its surrounding objects to avoid any collisions with the gripper.

← Back to projects