Reinforcement Learning with Humanoid Robots

As this was a work project, I will not go into the technical details in depth, and will only showcase the end results.

Project Description

This was a project I worked on for about 4-5 weeks during my 3-month internship at Apicoo Robotics over the summer of 2025.

My task was to use reinforcement learning (RL) in NVIDIA Isaac Lab to train walking policies for the humanoid robot. While this would not be the policy used on the final humanoid, the key purposes of this project were to:

- Perform a basic validation of the feasibility of the robot’s design

- Build up Apicoo Robotics’ knowledge about RL and using NVIDIA Isaac Lab

- Possibly explore applying RL in other contexts, e.g. grasping policies for automated pick-and-place solutions

RL with Isaac Lab

Simulation Setup

As this was just an initial investigation into RL with the humanoid, the model was kept simple (a standard MLP). The observation space consisted only of proprioceptive data (joint angles, body velocities, etc.), and the action space was the desired joint angles.
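To make the observation/action setup above concrete, here is a minimal pure-Python sketch of a proprioceptive observation vector, a small MLP policy, and the mapping from policy outputs to desired joint angles. Everything here is an assumption for illustration: the joint count, the term ordering, the inclusion of the velocity command as an input, the ELU activation (a common choice for locomotion MLPs) and the action scale of 0.5 are not taken from the project.

```python
import math
import random

NUM_JOINTS = 4  # hypothetical; the real robot's joint count is not given here


def build_observation(base_lin_vel, base_ang_vel, projected_gravity, velocity_cmd,
                      joint_pos, default_joint_pos, joint_vel, last_action):
    """Concatenate proprioceptive terms (plus the velocity command) into one flat vector."""
    # Joint angles are expressed relative to the default standing pose.
    joint_pos_rel = [q - q0 for q, q0 in zip(joint_pos, default_joint_pos)]
    return (list(base_lin_vel) + list(base_ang_vel) + list(projected_gravity)
            + list(velocity_cmd) + joint_pos_rel + list(joint_vel) + list(last_action))


class MLP:
    """Minimal fully connected network: ELU on hidden layers, linear output."""

    def __init__(self, sizes, seed=0):
        rng = random.Random(seed)
        self.layers = []
        for n_in, n_out in zip(sizes[:-1], sizes[1:]):
            bound = 1.0 / math.sqrt(n_in)
            weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
                       for _ in range(n_out)]
            self.layers.append((weights, [0.0] * n_out))

    def __call__(self, x):
        for i, (weights, bias) in enumerate(self.layers):
            x = [sum(w * xi for w, xi in zip(row, x)) + b
                 for row, b in zip(weights, bias)]
            if i < len(self.layers) - 1:  # ELU on hidden layers only
                x = [v if v > 0.0 else math.exp(v) - 1.0 for v in x]
        return x


def actions_to_joint_targets(action, default_joint_pos, scale=0.5):
    """Desired joint angle = default pose + scale * raw policy output."""
    return [q0 + scale * a for q0, a in zip(default_joint_pos, action)]


default_pose = [0.0, 0.3, -0.6, 0.3]  # hypothetical default joint angles (rad)
obs = build_observation(
    base_lin_vel=[0.0, 0.0, 0.0], base_ang_vel=[0.0, 0.0, 0.0],
    projected_gravity=[0.0, 0.0, -1.0], velocity_cmd=[0.5, 0.0, 0.0],
    joint_pos=default_pose, default_joint_pos=default_pose,
    joint_vel=[0.0] * NUM_JOINTS, last_action=[0.0] * NUM_JOINTS,
)
policy = MLP([len(obs), 64, 64, NUM_JOINTS])
targets = actions_to_joint_targets(policy(obs), default_pose)
```

Predicting offsets around a default pose, rather than absolute angles, means a zero output already corresponds to a sensible standing posture, which tends to make early training easier.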

The simulation environment was also set up to improve the chances of successful sim2real transfer in two ways: matching the real world as closely as possible, and implementing domain randomisation.

For the former, the actuator models were matched to the real motors’ rated specifications. The stiffness and damping of each joint’s actuator were also tuned to give a critically damped response, matching the responsiveness of the real-world actuators.
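One simple way to pick a critically damped stiffness/damping pair is to treat each joint as a single rigid inertia driven by a PD actuator, for which critical damping is d = 2√(k·I). The sketch below assumes exactly that simplified model (it ignores gravity, friction, and the fact that a joint's effective inertia changes with pose), and the stiffness and inertia values are hypothetical, not the project's. It checks the result by simulating a step response.

```python
import math


def critical_damping(stiffness, inertia):
    """Damping d giving zeta = 1 for I*q'' = k*(q_target - q) - d*q'."""
    return 2.0 * math.sqrt(stiffness * inertia)


def damping_ratio(stiffness, damping, inertia):
    """zeta = d / (2*sqrt(k*I)); 1.0 is critical, below 1.0 overshoots."""
    return damping / (2.0 * math.sqrt(stiffness * inertia))


def step_response(stiffness, damping, inertia, q_target=1.0, dt=1e-4, t_end=1.0):
    """Simulate a PD-driven joint from rest with semi-implicit Euler.

    Returns (peak position, final position) for a step in the target angle.
    """
    q, qd, peak = 0.0, 0.0, 0.0
    for _ in range(int(t_end / dt)):
        torque = stiffness * (q_target - q) - damping * qd
        qd += (torque / inertia) * dt
        q += qd * dt
        peak = max(peak, q)
    return peak, q


K = 100.0  # hypothetical joint stiffness, N*m/rad
I = 0.05   # hypothetical effective inertia about the joint, kg*m^2
D = critical_damping(K, I)  # about 4.47 N*m*s/rad
peak_crit, final_crit = step_response(K, D, I)
peak_under, _ = step_response(K, 0.2 * D, I)  # zeta = 0.2, for comparison
```

With the critically damped gains the joint settles on the target without overshoot, whereas the under-damped version overshoots by roughly 50%.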

For the latter, domain randomisation was applied throughout. Uniform random noise was added to the observations, with the range for each term set according to the estimated real-world noise of its associated sensor. Small deviations were also introduced to various physical properties, e.g. link masses, joint frictions, surface frictions, etc., by scaling each one by a random factor. These randomisations help produce a more robust policy that should be better able to operate in noisy real-world conditions.
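The two kinds of randomisation described above can be sketched as follows: per-term uniform noise on the observations, and a random multiplicative factor on each physical property (typically resampled at episode resets). All term names, noise half-widths and scaling ranges below are hypothetical placeholders rather than the project's values; in Isaac Lab itself this is normally configured declaratively rather than hand-written like this.

```python
import random

# Hypothetical noise half-widths per observation term, in that term's units.
OBS_NOISE = {
    "base_ang_vel": 0.2,        # rad/s
    "projected_gravity": 0.05,  # unitless (normalised gravity vector)
    "joint_pos": 0.01,          # rad
    "joint_vel": 1.5,           # rad/s
}

# Hypothetical multiplicative ranges for physical properties.
PHYSICS_SCALE = {
    "link_mass": (0.9, 1.1),
    "joint_friction": (0.8, 1.2),
    "surface_friction": (0.6, 1.25),
}


def noisy_observation(obs_terms, rng):
    """Add independent uniform noise U(-n, n) to every element of each term."""
    return {
        name: [v + rng.uniform(-OBS_NOISE[name], OBS_NOISE[name]) for v in values]
        for name, values in obs_terms.items()
    }


def randomise_physics(nominal, rng):
    """Scale each nominal property by a random factor, e.g. once per episode reset."""
    randomised = {}
    for name, values in nominal.items():
        lo, hi = PHYSICS_SCALE[name]
        randomised[name] = [v * rng.uniform(lo, hi) for v in values]
    return randomised


rng = random.Random(42)
clean = {"base_ang_vel": [0.0, 0.0, 0.1], "joint_pos": [0.2, -0.4]}
noisy = noisy_observation(clean, rng)
nominal = {"link_mass": [10.0, 3.5, 1.2], "surface_friction": [1.0]}
sampled = randomise_physics(nominal, rng)
```

Multiplicative scaling keeps each randomised value proportional to its nominal one, so a single range such as ±10% can be shared across links of very different masses.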

Training Policies

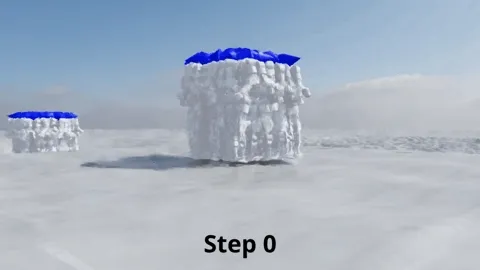

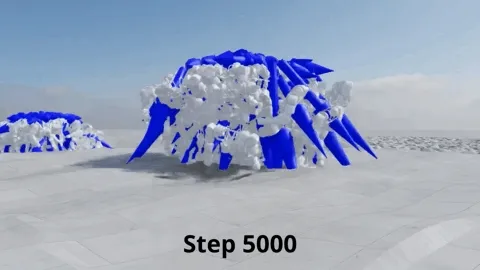

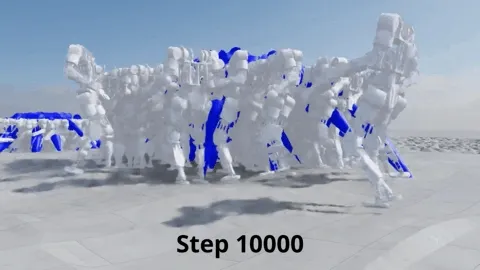

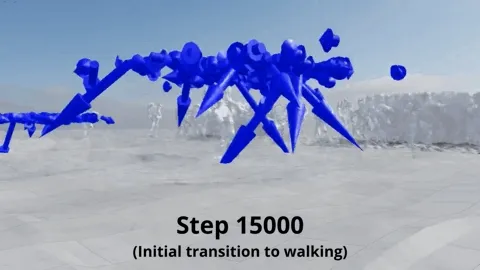

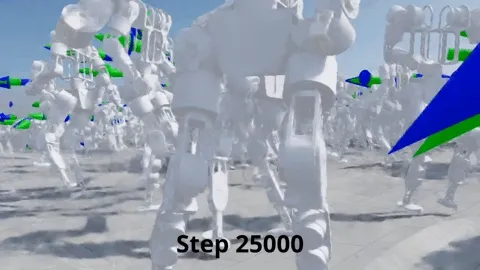

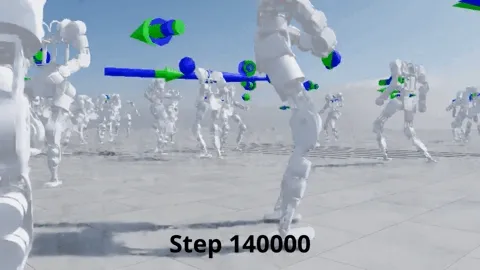

To successfully train a walking policy, a curriculum of increasing complexity was implemented.

A simpler standing policy was first trained by omitting the target velocity and heavily rewarding the agent for remaining upright without falling.

Once the agents could stand with decent success, a target velocity was added, and the reward weighting was gradually shifted from simply surviving towards matching that target. Because the agents were rewarded for following both a target linear velocity and a target angular velocity, they learnt to turn and walk omnidirectionally.
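The standing-to-walking curriculum above can be sketched as a reward whose weights are blended over training iterations: early on, only staying upright pays; later, tracking the commanded velocity dominates. The exponential tracking kernel is a form commonly used in legged-locomotion velocity tasks, but the function names, the linear ramp, the iteration thresholds and all weights here are hypothetical illustrations, not the project's actual reward.

```python
import math


def tracking_reward(cmd, vel, sigma=0.25):
    """exp(-||cmd - vel||^2 / sigma): 1.0 for perfect tracking, decaying towards 0."""
    err_sq = sum((c - v) ** 2 for c, v in zip(cmd, vel))
    return math.exp(-err_sq / sigma)


def blend_factor(iteration, start=1000, ramp=2000):
    """0.0 during the standing stage, then rises linearly to 1.0 over `ramp` iterations."""
    return min(max((iteration - start) / ramp, 0.0), 1.0)


def curriculum_reward(iteration, cmd, vel, fell):
    """Reward that shifts from 'stay upright' towards 'follow the velocity command'.

    cmd and vel are (v_x, v_y, yaw_rate): target vs. measured base velocity.
    During the standing stage the command is zero and w_track is 0 anyway.
    """
    alpha = blend_factor(iteration)
    w_alive = 2.0 * (1.0 - alpha) + 0.2 * alpha  # hypothetical weights
    w_track = 2.0 * alpha
    alive = 0.0 if fell else 1.0
    fall_penalty = -10.0 if fell else 0.0
    return w_alive * alive + w_track * tracking_reward(cmd, vel) + fall_penalty
```

For example, at iteration 0 an upright agent earns 2.0 regardless of the command, while after the ramp it earns 2.2 only if it tracks the command perfectly. In practice the switch can also be gated on a success metric rather than a fixed iteration count.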

After the agents could walk reliably, additional behaviours such as disturbance rejection and higher walking speeds were trained.
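The two later curriculum stages can be sketched like this, under stated assumptions: disturbances are modelled as random velocity "shoves" applied to the torso at random intervals, and higher speeds are introduced by widening the commanded-speed range only once tracking at the current range is good enough. The function names, intervals, thresholds and limits are hypothetical; the post does not say how these stages were implemented.

```python
import random


def schedule_next_push(now_s, rng, interval_range_s=(10.0, 15.0)):
    """Pick the time of the next shove, a random interval after `now_s` (seconds)."""
    lo, hi = interval_range_s
    return now_s + rng.uniform(lo, hi)


def sample_push(rng, max_speed=0.5):
    """Random planar velocity change (m/s) applied to the torso to emulate a shove."""
    return (rng.uniform(-max_speed, max_speed), rng.uniform(-max_speed, max_speed))


def update_command_limit(current_max, mean_tracking, threshold=0.8,
                         increment=0.1, hard_limit=2.0):
    """Widen the commanded-speed range only once tracking at the current range is good."""
    if mean_tracking > threshold:
        return min(current_max + increment, hard_limit)
    return current_max


# Example: one simulated 30 s episode with pushes, then a curriculum update.
rng = random.Random(0)
dt, t, next_push, pushes = 0.02, 0.0, schedule_next_push(0.0, rng), []
while t < 30.0:
    if t >= next_push:
        pushes.append(sample_push(rng))
        next_push = schedule_next_push(t, rng)
    t += dt
max_cmd_speed = update_command_limit(1.0, mean_tracking=0.85)
```

Gating the speed increase on tracking quality avoids asking the agent to run before it can walk, mirroring the standing-before-walking logic of the earlier stages.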

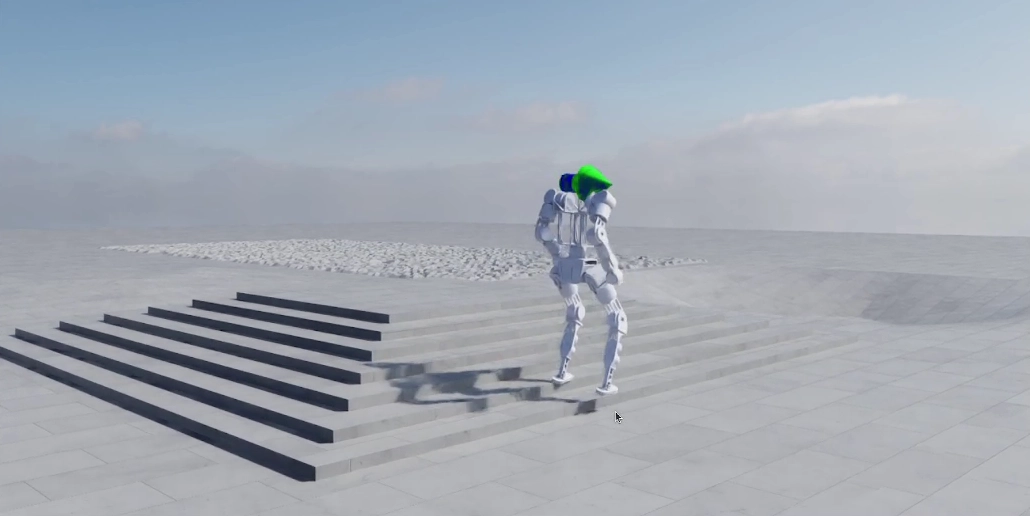

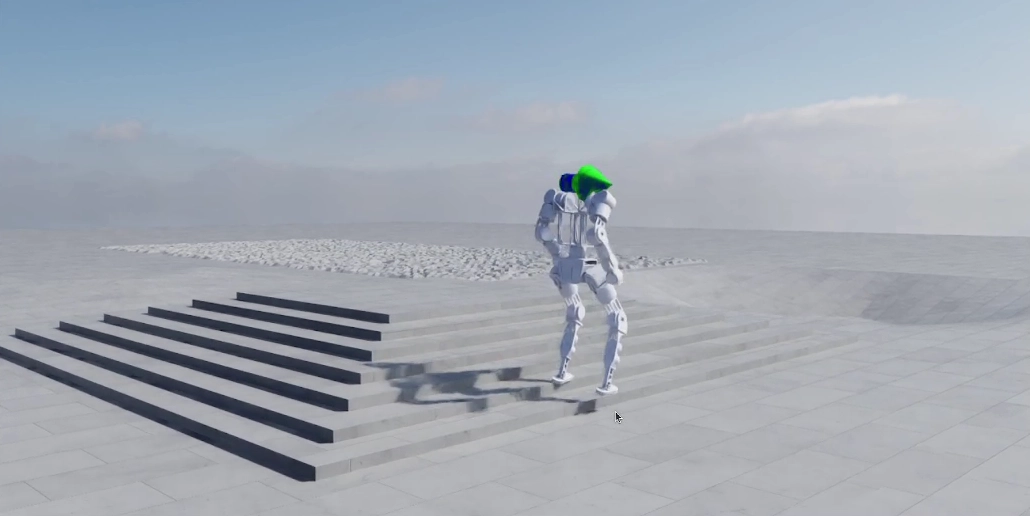

The videos below show the curriculum progression in more detail and the final successfully trained policies.

Challenges Faced

The main challenge (apart from RL being new to me) was tuning the reward function to suppress various forms of undesired emergent behaviour. With a high-dimensional action space, there were many different ways for the agent to converge on suboptimal policies.

Some examples of odd behaviours and their fixes were:

- Overly stiff knee (i.e. leg overly straightened): Increased the penalty for reaching the knee joint limits

- Body leans to one side: Increased the penalty for body tilt angles

- Agent swings its arms around to balance when trying to stand on the spot, instead of moving its legs: Increased the reward for lifting the feet off the ground (taking steps) and increased the penalty for rapid movement of the upper body joints

- Agent moves all its joints wildly and cannot even learn to stand: Decreased the entropy coefficient, encouraging exploitation over exploration and thus convergence
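The fixes listed above amount to adjusting the weights of individual reward terms, plus the entropy coefficient in the PPO loss. Below is a hedged sketch of what such terms commonly look like in legged-locomotion setups; the term names, margins, thresholds, weights and coefficients are hypothetical, and the project's actual reward definitions are not shown in this post.

```python
def joint_limit_penalty(q, q_min, q_max, margin=0.05):
    """How far joints intrude into a `margin` band (rad) next to their limits."""
    total = 0.0
    for qi, lo, hi in zip(q, q_min, q_max):
        total += max(0.0, (lo + margin) - qi) + max(0.0, qi - (hi - margin))
    return total


def tilt_penalty(projected_gravity):
    """Squared x/y gravity components in the body frame: 0.0 when the torso is level."""
    gx, gy, _ = projected_gravity
    return gx * gx + gy * gy


def feet_air_time_reward(first_contact, last_air_time, threshold=0.4):
    """On touchdown, reward each foot by how long its swing lasted beyond `threshold` s."""
    return sum(t - threshold for touched, t in zip(first_contact, last_air_time) if touched)


def upper_body_speed_penalty(upper_joint_vel):
    """Squared arm/torso joint velocities: discourages flailing to keep balance."""
    return sum(v * v for v in upper_joint_vel)


# Hypothetical weights; each fix in the list above amounts to raising one magnitude.
WEIGHTS = {"joint_limits": -1.0, "tilt": -2.0, "air_time": 1.0, "upper_body": -0.01}


def shaped_reward(terms):
    """Weighted sum of the individual reward/penalty terms."""
    return sum(WEIGHTS[name] * value for name, value in terms.items())


def ppo_loss(surrogate, value_loss, entropy, value_coef=1.0, entropy_coef=0.01):
    """PPO objective to minimise; a smaller entropy_coef favours exploitation."""
    return surrogate + value_coef * value_loss - entropy_coef * entropy
```

Because the entropy bonus is subtracted from the loss, a large `entropy_coef` keeps the action distribution wide (more random joint motion); shrinking it lets the policy commit to whatever is already working, which is why it helped the agent converge on standing.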

Concluding Thoughts

Due to month-long procurement delays, there was insufficient time to attempt sim2real transfer of the trained policy onto the constructed robot during my internship. However, given the realistic configuration and the domain randomisation implemented, I am fairly confident that the policy could have been transferred successfully.

Prior to this, I had no experience with NVIDIA Isaac Lab and only a cursory understanding of RL. On top of that, this was one of the company’s early forays into AI, which meant there was essentially no past work to reference and no subject matter expert to consult.

Some might find this a nightmare scenario, but I absolutely loved it, perhaps because it felt so oddly familiar to how I had done robotics in the past. Sure, I had to figure everything out myself, but by throwing myself into the deep end, I was able to learn rapidly and build an entire RL training pipeline from scratch.

On a personal note, watching the humanoid agent “come to life” and learn to walk by itself sparked the same awe and excitement I felt the first time I saw a Lego Mindstorms robot follow a line, all the way back when I was nine years old. If I’m being honest with myself, after doing robotics for so many years, it had slowly been losing its lustre. I was still gaining new specialised knowledge and applying fundamental skills in different contexts, and I still enjoyed building robotic systems, but it had become something that felt increasingly… dull.

Maybe that was just a normal consequence of a hobby turning into a career. Or maybe, for me, the novelty of robotics simply had a half-life of 10 years. Regardless, this exposure to RL has reinvigorated my interest in physical AI systems and robotics in general, and it is rippling into new personal projects, like building my own mini-PC ML workstation and testing out RL approaches to various classical control problems!

Acknowledgements:

- Isaac Lab Project Developers (2025): Baseline environment setup for the velocity task, especially the H1 example. [GitHub]

- Berkeley Humanoid Developers (2024): Reference for a realistic configuration that had successful sim2real transfer. [GitHub]
